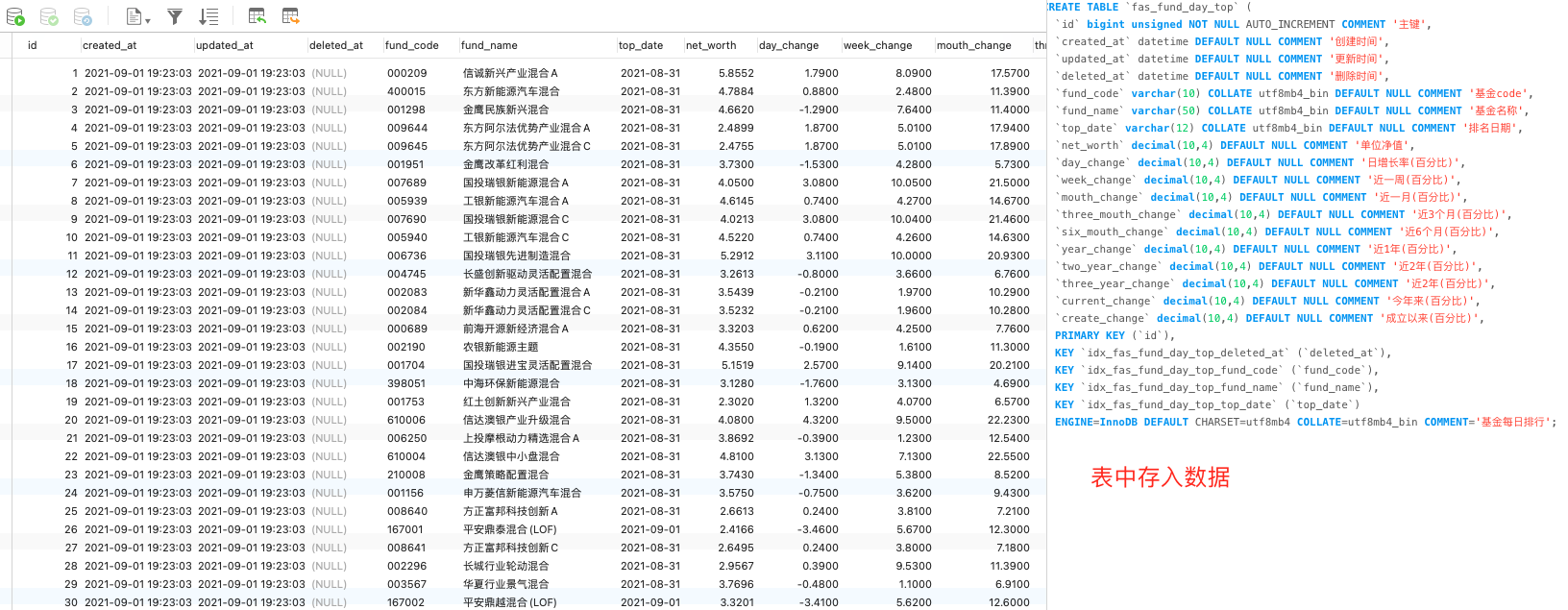

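```go
import (
	"fmt"
	"strconv"
	"time"
	// entity and utils are this project's own packages; their import paths are not shown in the source.
)

// ConvertEntity converts the crawled ranking items into entity.FundDayTop records.
func (f *TopCrawlService) ConvertEntity() []entity.FundDayTop {
	var topList []entity.FundDayTop

	// item.TopDate appears to carry only month and day, so the current year is
	// prepended. Computed once, outside the loop. Caveat: data from late December
	// that is processed in early January will be stamped with the new year.
	year := time.Now().Format("2006")

	for _, item := range f.Item {
		// Skip rows that have no fund code.
		if item.FundCode == "" {
			continue
		}

		fundTmp := entity.FundDayTop{}
		fundTmp.FundCode = item.FundCode

		// Format the date as "<year>-<item.TopDate>".
		fundTmp.TopDate = fmt.Sprintf("%s-%s", year, item.TopDate)

		// Convert the fund name from GBK to UTF-8.
		fundTmp.FundName, _ = utils.GbkToUtf8(item.FundName)

		// Convert the numeric strings to float64. Errors are discarded, so a
		// value that fails to parse ends up as 0.
		fundTmp.NetWorth, _ = strconv.ParseFloat(item.NetWorth, 64)
		fundTmp.DayChange, _ = strconv.ParseFloat(item.DayChange, 64)
		fundTmp.WeekChange, _ = strconv.ParseFloat(item.WeekChange, 64)
		fundTmp.MouthChange, _ = strconv.ParseFloat(item.MouthChange, 64)
		fundTmp.ThreeMouthChange, _ = strconv.ParseFloat(item.ThreeMouthChange, 64)
		fundTmp.SixMouthChange, _ = strconv.ParseFloat(item.SixMouthChange, 64)
		fundTmp.YearChange, _ = strconv.ParseFloat(item.YearChange, 64)
		fundTmp.TwoYearChange, _ = strconv.ParseFloat(item.TwoYearChange, 64)
		fundTmp.ThreeYearChange, _ = strconv.ParseFloat(item.ThreeYearChange, 64)
		fundTmp.CurrentChange, _ = strconv.ParseFloat(item.CurrentChange, 64)
		fundTmp.CreateChange, _ = strconv.ParseFloat(item.CreateChange, 64)

		topList = append(topList, fundTmp)
	}

	return topList
}
```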

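Because `ConvertEntity` discards the errors from `strconv.ParseFloat`, a missing or malformed value is stored as 0 and cannot be told apart from a genuine 0% change. A minimal sketch of a stricter parser follows. It assumes the crawled page uses an empty string or `--` for missing data (an assumption, not confirmed by the source); `parseOptionalFloat` is a hypothetical name.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// parseOptionalFloat is a hypothetical helper. An empty string or the
// placeholder "--" (assumed to mean "no data") yields ok == false with no
// error; anything else must parse as a float64 or an error is returned.
func parseOptionalFloat(s string) (v float64, ok bool, err error) {
	s = strings.TrimSpace(s)
	if s == "" || s == "--" {
		return 0, false, nil
	}
	v, err = strconv.ParseFloat(s, 64)
	if err != nil {
		// *strconv.NumError already includes the offending input.
		return 0, false, err
	}
	return v, true, nil
}

func main() {
	for _, in := range []string{"1.2345", " -0.57 ", "--", "", "abc"} {
		v, ok, err := parseOptionalFloat(in)
		fmt.Printf("%q -> value=%v ok=%v err=%v\n", in, v, ok, err)
	}
}
```

Separating "missing" (`ok == false`, `err == nil`) from "malformed" (`err != nil`) lets the caller store a NULL or skip the field for missing data while still logging genuinely bad input.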